IT Support

Modern IT Support for the Modern SMB

Technology Should Always Drive Your Business Forward - IT Should Never Hold You Back

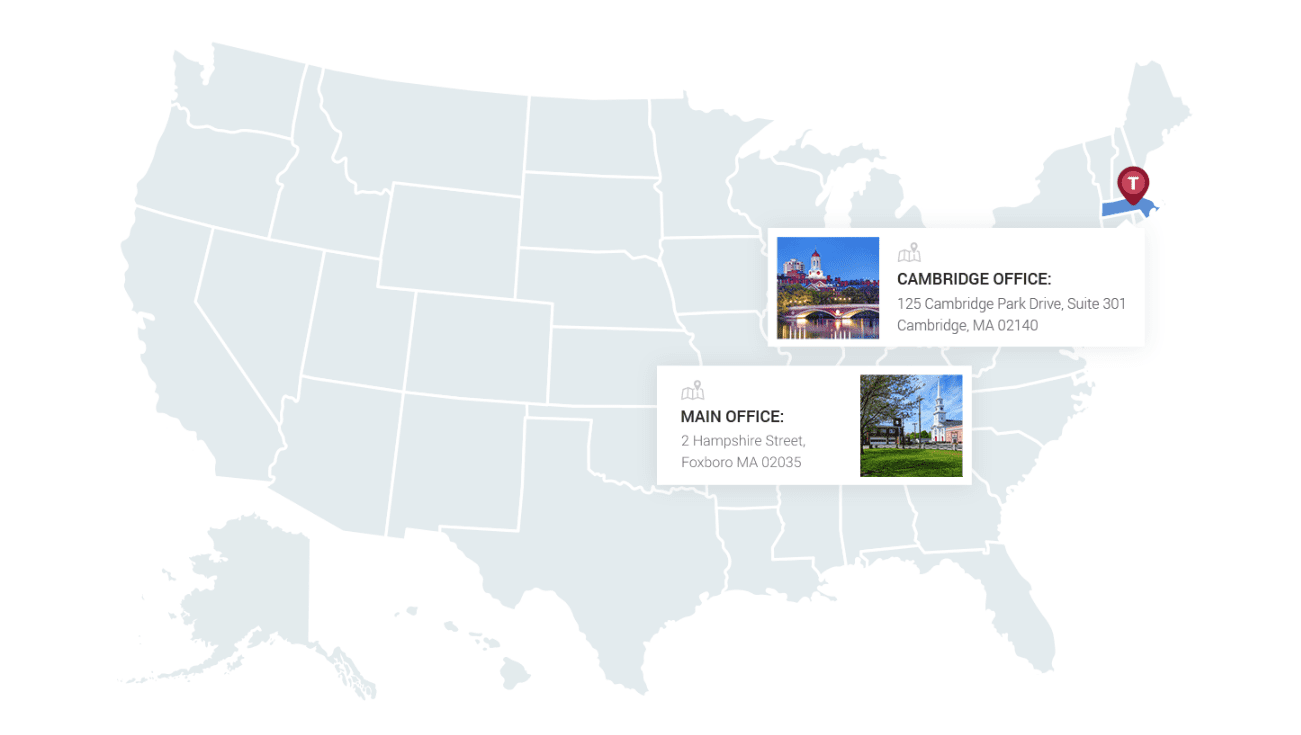

Managed IT support removes as many day-to-day stresses as possible so that you can focus your team's attention, creativity and energy on what matters most: running your business. That is easier said than done when you're constantly worrying about backup and disaster recovery, managing the Pandora's box that is your bring-your-own-device environment, or cybersecurity threats that seem to get worse by the day. At Technical Support International, we help organizations throughout Boston solve technology issues like these every single day.

Our Core Competencies & Services

Industries Served Locally & Nationwide

Cybersecurity Services

IT Support & Cybersecurity

Your business has enough to worry about without also managing day-to-day computer maintenance and becoming information technology experts.

Our services allow you to focus all of your energy on the continued growth and success of your organization, leaving the maintenance and technical support of your IT to us.

Cyber Security Services

TSI’s Managed Security Services plans minimize the impact of today’s most common security threats so you can peacefully focus on growing your business.

Governance & IT Compliance

TSI specializes in helping SMBs meet their federal, state or industry compliance mandates and information regulatory requirements.

Mobile Device Management

TSI’s engineers are trained to manage the complexities of safeguarding mobile device data and to educate users on the advantages of mobile computing.

vCIO/vCISO Services

Between daily tasks and an ever-evolving cybersecurity and business technology landscape, it’s easy to lose touch with the latest technological offerings.

Managed Services

Our experts use state-of-the-art IT tools to monitor and manage your servers, workstations, network devices and next-generation mobile platforms around the clock.

Cloud Services

Our cloud service professionals help you weigh the factors involved in a move to the cloud and provide the information needed to make an informed decision that fits your organization’s objectives.

Backup & Disaster Recovery

We prepare organizations for worst-case scenarios by pairing them with the technology solutions needed to meet their expectations for uptime and data retention.

Contracted Services

TSI’s Project Management team works with your business to implement a solution that addresses your objectives and enables your IT staff to focus on their core competencies.

Who We Are

Since 1989, Technical Support International (TSI) has been a leading Managed IT Service Provider, proudly serving the IT support needs of hundreds of New England SMBs. The foundation of our success has been, and always will be, our dedicated and knowledgeable team of engineering support professionals.

Our Core Values

Our team's individual and collective capabilities assist clients with everything from day-to-day computer support to complex technological challenges. Our most valued assets are the relationships and trust we build with our clients, our employees, and our respective communities.

Our years of business technology experience will ensure your peace of mind and keep your IT strategy aligned with your business objectives.

If you are seeking a trusted managed IT services provider, or have questions about how we’ve helped other organizations make the most of their technology investments, please contact us. We are here to help.

Why TSI?

STANDARDS

TSI practices the highest industry standards for cybersecurity; we practice what we preach.

SOLUTIONS

CISSP-led, on-staff security compliance team; your one stop for everything CMMC.

EXPERTISE

Extensive military & government expertise: top DoD primes, U.S. Army, Air Force & Navy.

EXPERIENCE

30-year partner to over 100 small businesses & manufacturers.

Additional Areas of Expertise

Results

That Speak for Themselves

We Don't Have Vendors

We Have Partners

TSI is proud to partner with many of the leading enterprise technology vendors to provide the IT support needed to fulfill our clients' needs. Below are just a few of the many organizations that help contribute to the success of our clients:

Latest News

PCI Compliance Updates Coming in April 2024

TSI Joins Forces with PreVeil to Streamline DoD Contractor Compliance with NIST 800-171 and CMMC 2.0

TSI Employee Spotlight: Logan Abell

Featured in: